NoOps means developers can code and let a service deploy, manage and scale their code

I don't want to "do ops"

I want to change the system by landing commits

If I have to use my root access, it's a bug

Every commit is fully integration tested (twice) before landing

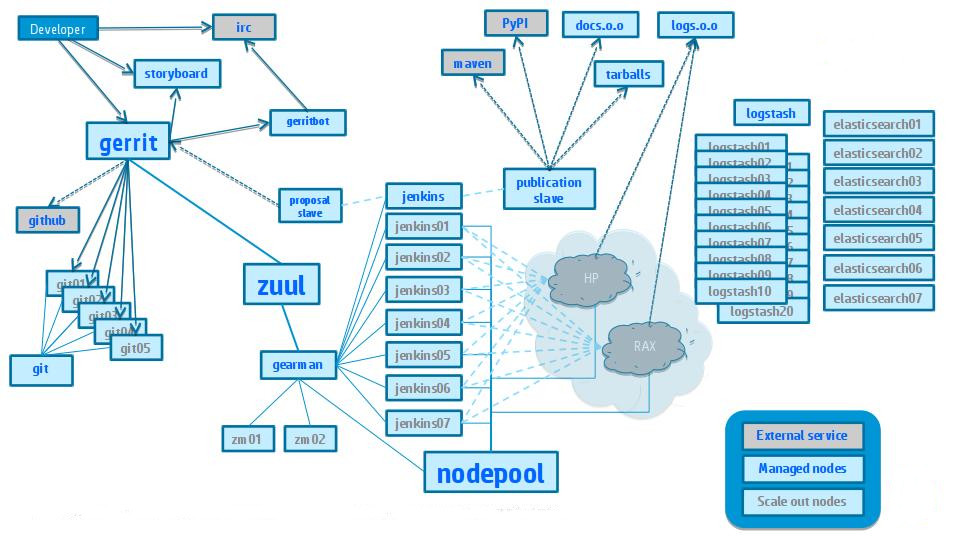

This is that "cloud scale out" part

It all runs across HP and Rackspace Public Clouds.

    package { 'git':
      ensure => 'present',
    }

    if !defined(Package['git']) {
      package { 'git':
        ensure => 'present',
      }
    }

    define user::virtual::localuser(
      $realname,
      $groups     = [ 'sudo', 'admin', ],
      $sshkeys    = '',
      $key_id     = '',
      $old_keys   = [],
      $shell      = '/bin/bash',
      $home       = "/home/${title}",
      $managehome = true
    ) {
      group { $title:
        ensure => present,
      }

      user { $title:
        ensure     => present,
        comment    => $realname,
        gid        => $title,
        groups     => $groups,
        home       => $home,
        managehome => $managehome,
        membership => 'minimum',
        shell      => $shell,
        require    => Group[$title],
      }

      ssh_authorized_key { $key_id:
        ensure => present,
        key    => $sshkeys,
        user   => $title,
        type   => 'ssh-rsa',
      }

      if ( $old_keys != [] ) {
        ssh_authorized_key { $old_keys:
          ensure => absent,
          user   => $title,
        }
      }
    }

    node default {
      class { 'openstack_project::server':
        sysadmins => hiera('sysadmins', []),
      }
    }

Breaks ability to use simple puppet apply

    ansible '*' -m shell -a uptime

    - hosts: '*.slave.openstack.org'
      tasks:
        - shell: 'rm -rf ~jenkins/workspace/*{{ project }}*'

That's executed:

    ansible-playbook -f 10 /etc/ansible/clean_workspaces.yaml --extra-vars "project=$PROJECTNAME"

    import pipes  # provides pipes.quote; on Python 3 use shlex.quote instead

    from ansible.module_utils.basic import AnsibleModule


    def main():
        module = AnsibleModule(argument_spec=dict(
            timeout=dict(default="30m"),
            puppetmaster=dict(required=True),
            show_diff=dict(default=False, aliases=['show-diff'], type='bool'),
        ))
        p = module.params

        puppet_cmd = module.get_bin_path("puppet", False)
        if not puppet_cmd:
            module.fail_json(msg="Could not find puppet. Please ensure it is installed.")

        # Build a one-shot agent run, wrapped in timeout(1) so it cannot hang.
        cmd = ("timeout -s 9 %(timeout)s %(puppet_cmd)s agent --onetime"
               " --server %(puppetmaster)s"
               " --ignorecache --no-daemonize --no-usecacheonfailure --no-splay"
               " --detailed-exitcodes --verbose") % dict(
                   timeout=pipes.quote(p['timeout']), puppet_cmd=puppet_cmd,
                   puppetmaster=pipes.quote(p['puppetmaster']))
        if p['show_diff']:
            cmd += " --show-diff"
        rc, stdout, stderr = module.run_command(cmd)

        if rc == 0:  # success
            module.exit_json(rc=rc, changed=False, stdout=stdout)
        elif rc == 1:
            # rc==1 could be because it's disabled OR there was a compilation failure
            disabled = "administratively disabled" in stdout
            if disabled:
                msg = "puppet is disabled"
            else:
                msg = "puppet compilation failed"
            module.fail_json(rc=rc, disabled=disabled, msg=msg, stdout=stdout, stderr=stderr)
        elif rc == 2:  # success with changes
            module.exit_json(changed=True)
        elif rc == 124:  # timeout
            module.exit_json(rc=rc, msg="%s timed out" % cmd, stdout=stdout, stderr=stderr)
        else:  # failure
            module.fail_json(rc=rc, msg="%s failed" % (cmd), stdout=stdout, stderr=stderr)


    if __name__ == '__main__':
        main()
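The return-code branching in the module above can be pulled out into a small pure function, which makes it easy to check without running puppet. This is only a sketch: the name `classify_puppet_run` and the `(failed, changed, msg)` tuple are hypothetical, and the branches simply mirror the module shown above.

```python
def classify_puppet_run(rc, stdout=""):
    """Map a puppet agent return code to (failed, changed, msg).

    Hypothetical helper; it mirrors the branches of the module above,
    which runs puppet with --detailed-exitcodes.
    """
    if rc == 0:
        # Run succeeded and nothing needed changing.
        return (False, False, "no changes")
    if rc == 1:
        # Either the agent is disabled or the catalog did not compile.
        if "administratively disabled" in stdout:
            return (True, False, "puppet is disabled")
        return (True, False, "puppet compilation failed")
    if rc == 2:
        # Run succeeded and resources were changed.
        return (False, True, "changes applied")
    if rc == 124:
        # The module above reports a timeout via exit_json, so it is
        # not marked as failed here either.
        return (False, False, "timed out")
    # Anything else is treated as a failure.
    return (True, False, "failed with rc=%d" % rc)
```

Keeping the mapping separate from `AnsibleModule` means the same table could back both the module and a unit test.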

    - name: run puppet
      puppet:
        puppetmaster: "{{puppetmaster}}"

roles/puppet/tasks/main.yml

    - hosts: git0*
      gather_facts: false
      max_fail_percentage: 1
      roles:
        - { role: puppet, puppetmaster: puppetmaster.openstack.org }

    - hosts: review.openstack.org
      gather_facts: false
      roles:
        - { role: puppet, puppetmaster: puppetmaster.openstack.org }

    - hosts: "!review.openstack.org:!git0*:!afs*"
      gather_facts: false
      roles:
        - { role: puppet, puppetmaster: puppetmaster.openstack.org }
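To see which hosts each play above would touch, here is a rough approximation of the host patterns using shell-style globbing. It is a sketch, not Ansible's real pattern engine: it only handles `:` (union) and `!` (exclusion), assumes an exclusion-only pattern starts from all hosts, and `select_hosts` and `INVENTORY` are hypothetical names.

```python
from fnmatch import fnmatchcase


def select_hosts(pattern, inventory):
    """Approximate an Ansible host pattern made of ':'-separated globs.

    Parts starting with '!' exclude hosts; all other parts include them.
    If only exclusions are given, every host is a candidate.
    """
    parts = pattern.split(":")
    includes = [p for p in parts if not p.startswith("!")]
    excludes = [p[1:] for p in parts if p.startswith("!")]
    if not includes:
        includes = ["*"]
    return [
        host for host in inventory
        if any(fnmatchcase(host, p) for p in includes)
        and not any(fnmatchcase(host, p) for p in excludes)
    ]


# Sample host list taken from the inventory slides in this talk.
INVENTORY = [
    "review.openstack.org",
    "git01.openstack.org",
    "git02.openstack.org",
    "pypi.dfw.openstack.org",
    "pypi.iad.openstack.org",
]

git_hosts = select_hosts("git0*", INVENTORY)
everything_else = select_hosts("!review.openstack.org:!git0*:!afs*", INVENTORY)
```

With this inventory, the three plays partition the hosts: the git servers first, then review, then everything that is left.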

    review.openstack.org
    git01.openstack.org
    git02.openstack.org
    pypi.dfw.openstack.org
    pypi.iad.openstack.org

    [pypi]
    pypi.dfw.openstack.org
    pypi.iad.openstack.org

    [git]
    git01.openstack.org
    git02.openstack.org

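    import json
    import subprocess

    # Signed certificates are listed with a leading '+'; the second field
    # on the line is the quoted host name.
    output = [
        x.split()[1][1:-1] for x in subprocess.check_output(
            ["puppet", "cert", "list", "-a"],
            universal_newlines=True).split('\n')
        if x.startswith('+')
    ]

    data = {
        '_meta': {'hostvars': dict()},
        'ungrouped': output,
    }

    # print() with a single argument works on both Python 2 and Python 3.
    print(json.dumps(data, sort_keys=True, indent=2))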
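The parsing step in the inventory script above is easier to follow against canned input. The sketch below applies the same list comprehension to a sample string instead of calling puppet. The sample lines are illustrative, not captured output, and `signed_hosts` and `build_inventory` are hypothetical names.

```python
import json

# Illustrative stand-in for `puppet cert list -a` output:
# '+' marks a signed certificate, '-' a revoked one,
# and no marker means the request is still pending.
SAMPLE = (
    '+ "git01.openstack.org" (SHA256) AA:BB:CC\n'
    '+ "review.openstack.org" (SHA256) DD:EE:FF\n'
    '  "new-host.openstack.org" (SHA256) 11:22:33\n'
    '- "old-host.openstack.org" (SHA256) 44:55:66 (certificate revoked)\n'
)


def signed_hosts(cert_list_output):
    """Return host names from lines that start with '+', quotes stripped."""
    return [
        line.split()[1][1:-1]
        for line in cert_list_output.split("\n")
        if line.startswith("+")
    ]


def build_inventory(hosts):
    """Wrap a host list in the JSON structure the script above emits."""
    return {"_meta": {"hostvars": {}}, "ungrouped": hosts}


inventory_json = json.dumps(
    build_inventory(signed_hosts(SAMPLE)), sort_keys=True, indent=2)
```

Only hosts with a signed certificate end up in the inventory, so a machine becomes manageable by Ansible once puppet knows about it.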

    pypi.dfw.openstack.org:
      image_name: Ubuntu 12.04.4
      flavor_ram: 2048
      region: DFW
      cloud: rackspace
      volumes:
        - size: 200
          mount: /srv
    pypi.region-b.geo-1.openstack.org:
      image_name: Ubuntu 12.04.4
      flavor_ram: 2048
      region: region-b.geo-1
      cloud: hp
      volumes:
        - size: 200
          mount: /srv

    pypi:
      image_name: Ubuntu 12.04.4
      flavor_ram: 2048
      volumes:
        - size: 200
          mount: /srv
      hosts:
        pypi.dfw:
          region: DFW
        pypi.iad:
          region: IAD
        pypi.ord:
          region: ORD
        pypi.region-b.geo-1:
          cloud: hp
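One way to read the grouped definition above is as group-level defaults that each host entry overrides. The sketch below expands it back into per-host records. It rests on assumptions the slides do not state: that short names get an `openstack.org` suffix, that `rackspace` is the default cloud, and that the data is already loaded into a dict. `expand`, `DOMAIN` and `DEFAULT_CLOUD` are hypothetical names.

```python
import copy

DOMAIN = "openstack.org"     # assumed suffix for short host names
DEFAULT_CLOUD = "rackspace"  # assumed default when a host names no cloud

# The grouped definition from the slide, as a Python dict.
GROUPS = {
    "pypi": {
        "image_name": "Ubuntu 12.04.4",
        "flavor_ram": 2048,
        "volumes": [{"size": 200, "mount": "/srv"}],
        "hosts": {
            "pypi.dfw": {"region": "DFW"},
            "pypi.iad": {"region": "IAD"},
            "pypi.ord": {"region": "ORD"},
            "pypi.region-b.geo-1": {"cloud": "hp"},
        },
    },
}


def expand(groups, domain=DOMAIN, default_cloud=DEFAULT_CLOUD):
    """Merge group defaults with per-host overrides into flat records."""
    servers = {}
    for group_name, group in groups.items():
        defaults = {k: v for k, v in group.items() if k != "hosts"}
        for short_name, overrides in group["hosts"].items():
            server = copy.deepcopy(defaults)  # hosts must not share lists
            server.setdefault("cloud", default_cloud)
            server.update(overrides)
            server["group"] = group_name
            servers["%s.%s" % (short_name, domain)] = server
    return servers


servers = expand(GROUPS)
```

Adding another mirror then costs one host entry with its region rather than a full copy of the server description.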

    ---
    - name: Launch Node
      os_compute:
        cloud: "{{ cloud }}"
        region_name: "{{ region_name }}"
        name: "{{ name }}"
        image_name: "{{ image_name }}"
        flavor_ram: "{{ flavor_ram }}"
        flavor_include: "{{ flavor_include }}"
        meta:
          group: "{{ group }}"
        key_name: "{{ launch_keypair }}"
      register: node

    - name: Create volumes
      os_volume:
        cloud: "{{ cloud }}"
        size: "{{ item.size }}"
        display_name: "{{ item.display_name }}"
      with_items: volumes

    - name: Attach volumes
      os_compute_volume:
        cloud: "{{ cloud }}"
        server_id: "{{ node.id }}"
        volume_name: "{{ item.display_name }}"
      with_items: volumes
      register: attached_volumes

    - debug: var=attached_volumes

    - name: Re-request server to get up to date metadata after the volume loop
      os_compute_facts:
        cloud: "{{ cloud }}"
        name: "{{ name }}"
      when: attached_volumes.changed

    - name: Wait for SSH to work
      wait_for: host={{ node.openstack.interface_ip }} port=22
      when: node.changed == True

    - name: Add SSH host key to known hosts
      shell: ssh-keyscan "{{ node.openstack.interface_ip|quote }}" >> ~/.ssh/known_hosts
      when: node.changed == True

    - name: Add all instance public IPs to host group
      add_host:
        name: "{{ node.openstack.interface_ip }}"
        groups: "{{ provision_group }}"
        openstack: "{{ node.openstack }}"
      when: attached_volumes|length == 0

    - name: Add all instance public IPs to host and volumes group
      add_host:
        name: "{{ node.openstack.interface_ip }}"
        groups: "{{ provision_group }},hasvolumes"
        openstack: "{{ node.openstack }}"
      when: attached_volumes|length != 0

    "pypi.dfw.openstack.org": {
      "ansible_ssh_host": "23.253.237.8",
      "openstack": {
        "HUMAN_ID": true,
        "NAME_ATTR": "name",
        "OS-DCF:diskConfig": "MANUAL",
        "OS-EXT-STS:power_state": 1,
        "OS-EXT-STS:task_state": null,
        "OS-EXT-STS:vm_state": "active",
        "accessIPv4": "23.253.237.8",
        "accessIPv6": "2001:4800:7817:104:d256:7a33:5187:7e1b",
        "addresses": {
          "private": [
            {
              "addr": "10.208.195.50",
              "version": 4
            }
          ],
          "public": [
            {
              "addr": "23.253.237.8",
              "version": 4
            },
            {
              "addr": "2001:4800:7817:104:d256:7a33:5187:7e1b",
              "version": 6
            }
          ]
        },
        "cloud": "rax",
        "config_drive": "",
        "created": "2014-09-05T15:32:14Z",
        "flavor": {
          "id": "performance1-4",
          "links": [
            {
              "href": "https://dfw.servers.api.rackspacecloud.com/610275/flavors/performance1-4",
              "rel": "bookmark"
            }
          ],
          "name": "4 GB Performance"
        },
        "hostId": "adb603d4566efe0392756c76dab38ffcba22099368837c7973321e77",
        "human_id": "pypidfwopenstackorg",
        "id": "de672205-9245-46b6-b3df-489ccf9e0c17",
        "image": {
          "id": "928e709d-35f0-47eb-b296-d18e1b0a76b7",
          "links": [
            {
              "href": "https://dfw.servers.api.rackspacecloud.com/610275/images/928e709d-35f0-47eb-b296-d18e1b0a76b7",
              "rel": "bookmark"
            }
          ]
        },
        "interface_ip": "23.253.237.8",
        "key_name": "launch-node-root",
        "links": [
          {
            "href": "https://dfw.servers.api.rackspacecloud.com/v2/610275/servers/de672205-9245-46b6-b3df-489ccf9e0c17",
            "rel": "self"
          },
          {
            "href": "https://dfw.servers.api.rackspacecloud.com/610275/servers/de672205-9245-46b6-b3df-489ccf9e0c17",
            "rel": "bookmark"
          }
        ],
        "metadata": {},
        "name": "pypi.dfw.openstack.org",
        "networks": {
          "private": [
            "10.208.195.50"
          ],
          "public": [
            "23.253.237.8",
            "2001:4800:7817:104:d256:7a33:5187:7e1b"
          ]
        },
        "progress": 100,
        "region": "DFW",
        "status": "ACTIVE",
        "tenant_id": "610275",
        "updated": "2014-09-05T15:32:49Z",
        "user_id": "156284",
        "volumes": [
          {
            "HUMAN_ID": false,
            "NAME_ATTR": "name",
            "attachments": [
              {
                "device": "/dev/xvdb",
                "host_name": null,
                "id": "c6f5229c-1cc0-47c4-aab7-60db1f6cf8e8",
                "server_id": "de672205-9245-46b6-b3df-489ccf9e0c17",
                "volume_id": "c6f5229c-1cc0-47c4-aab7-60db1f6cf8e8"
              }
            ],
            "availability_zone": "nova",
            "bootable": "false",
            "created_at": "2014-09-05T14:37:42.000000",
            "device": "/dev/xvdb",
            "display_description": null,
            "display_name": "pypi.dfw.openstack.org/main01",
            "human_id": null,
            "id": "c6f5229c-1cc0-47c4-aab7-60db1f6cf8e8",
            "metadata": {
              "readonly": "False",
              "storage-node": "1845027a-5e07-47a1-9572-3eea4716f726"
            },
            "os-vol-tenant-attr:tenant_id": "610275",
            "size": 200,
            "snapshot_id": null,
            "source_volid": null,
            "status": "in-use",
            "volume_type": "SATA"
          }
        ]
      }
    },
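Host variables shaped like the record above are plain nested data, so anything consuming the inventory can query them directly. The sketch below works on a trimmed copy of that record. `attached_devices` and `hosts_in_region` are hypothetical helpers, not part of any inventory plugin.

```python
# Trimmed copy of the hostvars record shown above.
HOSTVARS = {
    "pypi.dfw.openstack.org": {
        "ansible_ssh_host": "23.253.237.8",
        "openstack": {
            "interface_ip": "23.253.237.8",
            "region": "DFW",
            "volumes": [
                {
                    "device": "/dev/xvdb",
                    "display_name": "pypi.dfw.openstack.org/main01",
                    "size": 200,
                },
            ],
        },
    },
}


def attached_devices(hostvars, host):
    """Return {volume display_name: block device} for one host."""
    volumes = hostvars[host].get("openstack", {}).get("volumes", [])
    return {v["display_name"]: v["device"] for v in volumes}


def hosts_in_region(hostvars, region):
    """Return the sorted names of hosts whose server is in `region`."""
    return sorted(
        name for name, hv in hostvars.items()
        if hv.get("openstack", {}).get("region") == region
    )


devices = attached_devices(HOSTVARS, "pypi.dfw.openstack.org")
dfw_hosts = hosts_in_region(HOSTVARS, "DFW")
```

Because the volume's device path travels with the host record, a later play could format and mount it without asking the cloud API again.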

ansible can just pass secrets to puppet apply as parameters

but that's another talk

http://inaugust.com/talks/ansible-cloud.html